Welcome to EFFIGIES, a weekly newsletter offering actionable insights from my journey through reading and writing comics, designed to inspire you towards building a better life. To become our best selves, we must burn away who we are today.

What’s Inside:

- My recent struggles with focus and my journey to solve those issues.

- Cyborg, of Teen Titans fame, captures the consuming nature of technology.

- How technology, especially social media, is distracting us and much worse.

- To be more focused and happier, we need to understand the value we want.

For a writer, the currency of the craft is not just words but focus. Over the last few years, though, I’ve noticed that it’s gotten harder and harder for me to sit and write for an extended period of time. There’s always some notification to check or some comment to respond to. There’s always some digital distraction.

I was justifiably concerned by this realization. If I can’t sit and write, I’m not a writer. So I needed to find a solution. That search led me down a deep rabbit hole, and, like Alice, the deeper I ventured, the more disturbing things became.

In the interest of complete transparency, this newsletter will travel down a pretty dark path, tackling some heavy topics, like genocide and suicide. By the end of that trek, though, I think there’s some light. I think there’s an opportunity for us to better manage our relationships with tech, to achieve our goals, and to live better lives.

Cyborg’s Knightmare.

A while back, I was reading Knight Terrors: Titans by Andrew Constant and Scott Godlewski. In the series, we are shown a “nightmare” version of classic Teen Titans member Cyborg, in which his human side has been completely taken over by the technology that gives him his superpowers.

Given my struggles with focus lately, Cyborg’s dilemma struck a chord. His technology, a tool he once used to be a hero, to save people, has become a prison. In our digital lives, the constant barrage of notifications, messages, and sometimes more harmful content can become a prison of its own, walling us off from productivity and even happiness.

But who is building these digital prisons, why, and how are they affecting us?

The Chaos Machine.

Businesses exist exclusively to capture value, through our attention or through money. Every Wednesday, we go into the comic shop and trade $3.99 for 20 to 22 pages of entertainment. That transaction is harmless, but not all businesses are as innocuous as your friendly local comic shop.

Let’s try a quick experiment: If you have the Facebook app, open it. Did you have any notifications? Did they excite or disappoint you? Was your feed interesting? If not, did you feel the urge to refresh for better content?

In his 2022 book, The Chaos Machine, New York Times investigative reporter Max Fisher reveals how tech and social media companies exploit human psychology to influence users’ behavior. If you’ve ever been to Las Vegas, the experiment we did above probably felt familiar. In the book, Fisher explains how social media uses unpredictable reward cues to keep us engaged.

Facebook and the other socials are engineering apps and algorithms to maximize our engagement with their platforms. Through unpredictable notifications and social rewards, they capture our attention. They win when we’re distracted, and that’s the root of the focus issue. But how bad could a little doom-scrolling really be?

To answer that question, we need to talk about rats.

Rats, Dopamine, and Outrage.

In a 2014 study, researchers demonstrated that rat brains can be hacked to produce addictive behavior. In the study, rats were repeatedly shown a light cue, followed by a 50% chance of receiving a reward of sugar water (mimicking slot machine payout rates).

This repeated exposure to the light cue and random rewards triggered dopamine release in the rats’ brains. Dopamine, a neurotransmitter crucial to the brain’s reward system, creates feelings of satisfaction and motivation, encouraging the repetition of rewarding behaviors.

Interestingly, the dopamine release was triggered by the light cue, not the reward, showing that anticipation of a potential reward drove the rats’ excitement.

Some other studies I read so you don’t have to:

- A study published in Nature explained how our brains are wired to prioritize and engage with negative information.

- A 2021 study out of Yale showed that moral outrage behavior, when reinforced with positive social signals, was more likely to be repeated in the future.

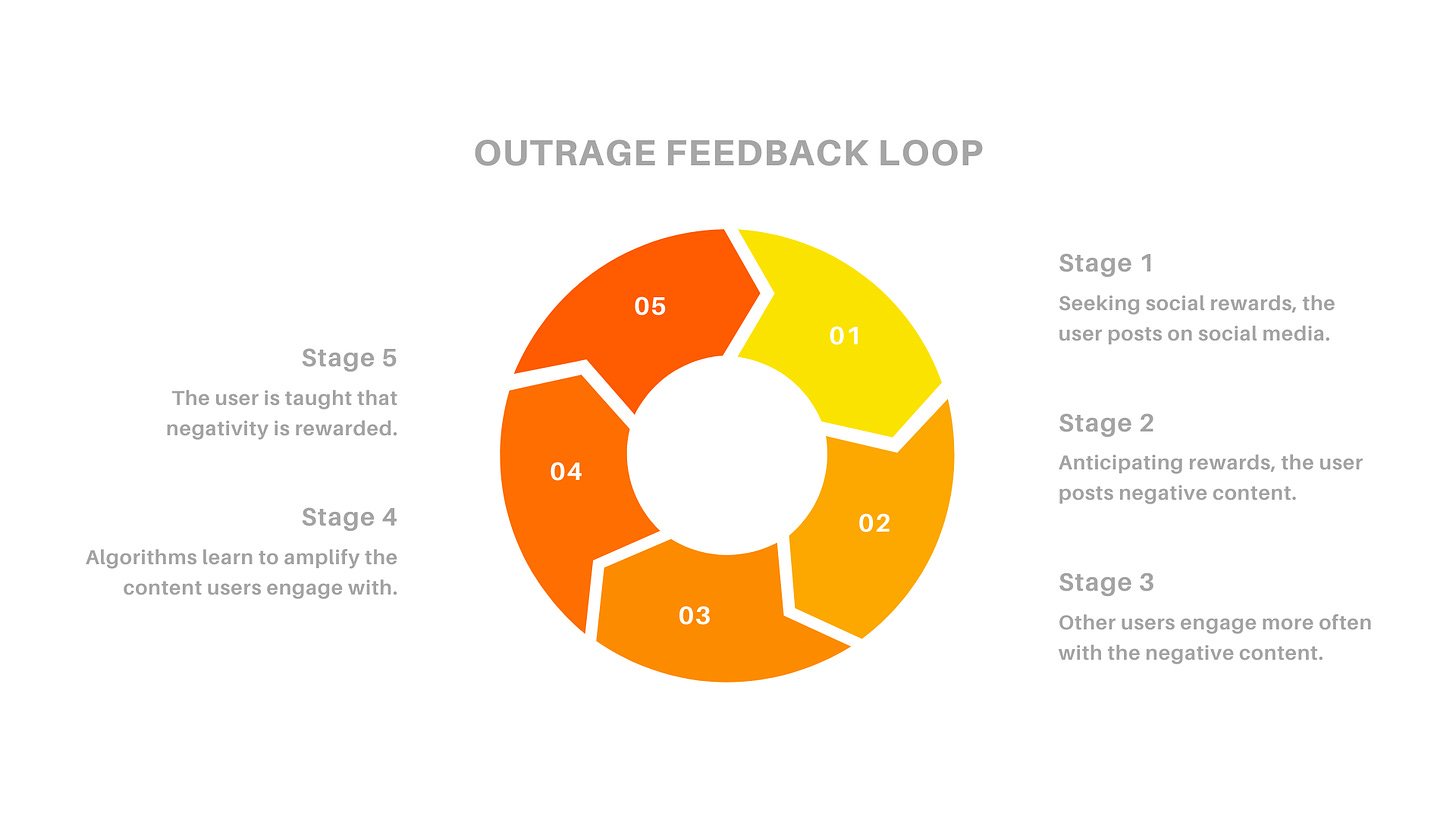

Now, let’s put it all together with some things we know to be true about social media:

- The anticipation of rewards (likes, comments, etc.) releases dopamine.

- Social media leverages random rewards to create addictive user behavior.

- Humans are biased toward engaging with negative content like moral outrage.

- Algorithms learn what users engage with, then reward and amplify that content.

- When users are rewarded more for negative content, the behavior is repeated.

That, my friends, is what we call a reinforcing cycle.

But again, how bad can it be? It’s just social media, right? Make no mistake: the Outrage Feedback Loop, or, as I like to call it, “The Doom Loop,” is bad.

In fact, it’s deadly.

The Doom Loop.

In the latter half of The Chaos Machine, Fisher documents how the 2017 genocide in Myanmar and the 2018 genocide in Sri Lanka were fueled by misinformation perpetuated on Facebook via The Doom Loop.

It goes without saying, but genocide – the purposeful elimination of human life at scale – is humanity’s most abhorrent creation. Yet, it’s happened twice in recent memory, at least in part, because a social media company saw more value in maximizing user engagement than in protecting human life.

More recently and a bit closer to home, comics lost New York Times bestselling writer and artist Ed Piskor. After allegations against him went viral on social media, Ed took his own life. He left a note, which I read, and one passage has haunted me ever since.

The vitriol against Ed wasn’t a surprise, though. Fisher boils it down to an apolitical format, a repeatable script we’ve seen over and over.

I didn’t know Ed, and I’m not here to comment on what he did or did not do, but this tragedy was avoidable. As someone who’s seen first-hand the ferocity with which The Doom Loop can create a dopamine-fueled feeding frenzy, I know this was avoidable.

But the tech companies aren’t going to change. Honestly, they’ll probably get worse. So the burden to change is on the users — it’s on us — but the question is, how do we go about making that change?

Cyborg Revisited.

In Knight Terrors: Titans, we’re shown a nightmarish portrayal of the dangers of unchecked technology. In the story, Cyborg is trapped in a ‘techno-nightmare,’ where his human side is overwhelmed by his machine half. As we read on, though, we see that Cyborg is rescued from this nightmare when he is shown his reflection in a special mirror.

The act reveals his true self. He sees the version of himself who has a purposeful, well-defined relationship with his machine half. That version isn’t shackled by technology. He leverages technology to achieve his goals, to be a hero. And by reclaiming that relationship, he is freed from his digital prison.

Digital Minimalism.

Cyborg’s transformation in Knight Terrors: Titans brings me to the second book I want to discuss, Digital Minimalism by Cal Newport.

Newport is a computer science professor at Georgetown University who writes about focus and productivity, making him one of the best-equipped people on the planet to help navigate the question in front of us.

If literal atrocities cannot move tech companies to meaningful change, nothing will. There is genuine value in these technologies, though, so how do we negotiate a healthy relationship with them? How can we be more like Cyborg and use technology to achieve our goals while avoiding its darker aspects, like The Doom Loop?

In the book, Newport suggests a simple idea for how we might approach our relationships with technology.

Newport asks us to reframe our relationship to technology as something that supports what we already value (our goals and happiness), rather than as the value itself.

Framework: Technology Partitioning.

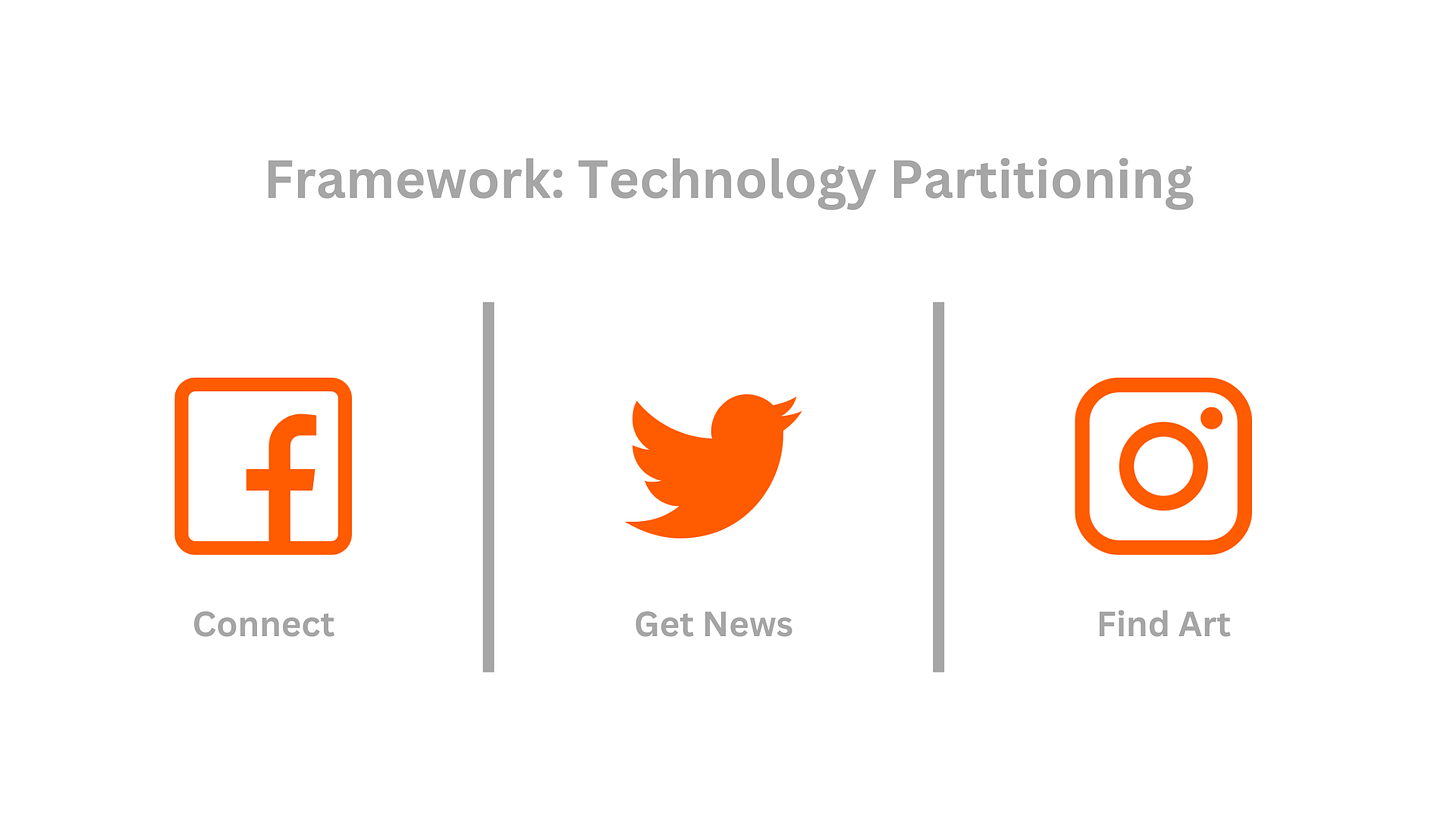

Ironically, we can look to technology itself for a helpful framework to put Newport’s ideas into action. In computing, hard drive partitioning divides a drive into separate, siloed spaces for things like operating systems, applications, and personal files, allowing the various parts of your computer to perform their functions optimally.

With two simple steps, we can use this strategy to define our relationships with technology:

- Understand your goal and the kinds of value that help you achieve it.

- If technology is additive, extract value; if not, eliminate it from the process.

Bringing this all the way back around, at the end of the day, my goal is to make great comics. Understanding how technology helps me achieve that goal allows me to partition it accordingly. For social media, here’s how I partition the Big 3 platforms:

When social media helps me connect with other comics folks, know what’s going on in the industry, or find talented collaborators, it’s a powerful resource. When it’s doing anything else, it’s just a distraction. So I recognize where my value is, and, taking a page out of the social media companies’ playbook, I capture as much of that value as possible.

Regardless of what value you get from social media and other technologies, I promise you, The Doom Loop will not help you achieve your goals or increase your happiness. In any way whatsoever. But armed with the framework above, you can define your relationship with the technology in your life. If you do, you will achieve more, be happier, and the world will be a bit better.

-Frank

I’m Frank Gogol, writer of comics such as Dead End Kids, No Heroine, Unborn, Power Rangers, and more. If this newsletter was interesting / helpful / entertaining…

The Gentleman’s Agreement

If you read this post and got some value from it, do me a solid and subscribe to my newsletter below. It costs nothing and it’s where I do interesting writing, anyway.

Frank Gogol is a San Francisco-based comic book writer. He is the writer of Dead End Kids (2019), GRIEF (2018), No Heroine (2020), Dead End Kids: The Suburban Job (2021), and Unborn (2021) as well as his work on the Power Rangers franchise.

Gogol’s first book, GRIEF, was nominated for the Ringo Award for Best Anthology in 2019. Gogol and his second book, Dead End Kids, were named Best Writer and Best New Series of 2019, respectively, by the Independent Creator Awards.